How To Know When Your A/B Test Is Reliable

Your A/B test is reliable when you have STATISTICALLY SIGNIFICANT and REPRESENTATIVE results.

A test is said to be “significant” when its results are unlikely to have occurred by chance. Achieving statistical significance can take time, and it is the main reason why some tests run longer than expected. Usually, people set their CONFIDENCE LEVEL at 95%. At a 95% confidence level, if there were no real difference between your pages, a result this strong would still appear by random chance about 5% of the time (1 in every 20 similar experiments).
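To see what “1 in 20” means in practice, here is a minimal Python sketch that simulates many A/A tests, where both pages share exactly the same true conversion rate, and counts how often a standard two-sided two-proportion z-test still reports significance at 95% confidence. The traffic figures, the 10% conversion rate and the helper names (`two_sided_p_value`, `simulate_aa_tests`) are illustrative assumptions, not Convertize’s own method.

```python
import math
import random


def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test (pooled variance, normal approximation)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    # P(|Z| >= |z|) for a standard normal Z
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(n_tests=1000, visitors=1000, rate=0.10, seed=42):
    """Run A/A tests in which both pages have the SAME true conversion rate."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_tests):
        conv_a = sum(rng.random() < rate for _ in range(visitors))
        conv_b = sum(rng.random() < rate for _ in range(visitors))
        if two_sided_p_value(conv_a, visitors, conv_b, visitors) < 0.05:
            false_positives += 1
    return false_positives / n_tests


print(f"A/A tests flagged as significant: {simulate_aa_tests():.1%}")
```

With no real difference between the pages, roughly 5% of the simulated tests should still come out “significant”, which is exactly the risk a 95% confidence level accepts.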

However, your test has to achieve one more thing before it is reliable. Because you are testing on a sample of your visitors, that sample has to be representative. In practice, this means your test should run for at least a week, but not for too long.

When it comes to analysing how reliable your A/B tests are, Convertize does all the heavy lifting for you. However, if you want to calculate the significance of a test manually, Convertize offers a free A/B testing significance calculator. To explore all the technical terms in depth, you can read our guide to A/B testing statistics.

How Long Should You Run An A/B Test?

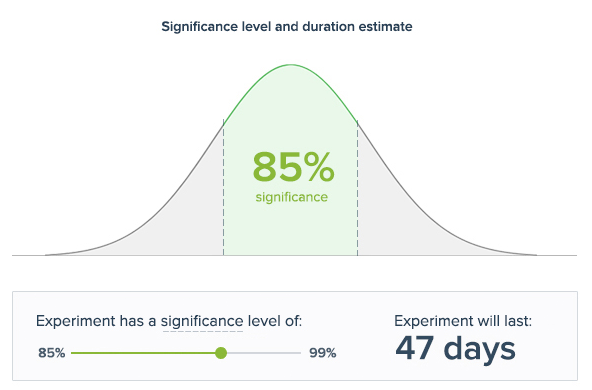

Until your A/B test has reached statistical significance, there is a real chance that your “winning” page is no better than the other one. Because of this, it is important to keep an A/B test running until it reaches significance at your chosen confidence level.

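However, a test that runs for months on end is likely to produce less reliable data because of cookie deletion: visitors who clear their cookies can be counted as new visitors and may be shown a different version of your page. You also need to avoid overly brief A/B tests, because your traffic varies naturally over the course of a week (weekday visitors often behave differently from weekend visitors), and a shorter test may not capture that cycle.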

With these conditions in mind, there is an optimal time window within which significant test results are reliable. We recommend running a test for between one week and one month.
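The one-week-to-one-month rule can be turned into a quick planning check. This is a minimal sketch, assuming you already know how many visitors each variation needs and roughly how many visitors your page receives per day. Rounding up to whole weeks and the 30-day ceiling are our own reading of the recommendation, and `plan_test_duration` is a hypothetical helper.

```python
import math

# Assumption: the "one week to one month" advice expressed as day limits.
MIN_DAYS = 7    # one full weekly traffic cycle
MAX_DAYS = 30   # roughly one month, before cookie deletion erodes the data


def plan_test_duration(visitors_per_variation, variations, daily_visitors):
    """Estimate test length in days, rounded up to whole weeks.

    Returns (days, within_window). Hypothetical helper for illustration.
    """
    total_needed = visitors_per_variation * variations
    raw_days = math.ceil(total_needed / daily_visitors)
    # Round up to whole weeks so every day of the week is covered equally,
    # and never plan for less than one week.
    days = max(MIN_DAYS, math.ceil(raw_days / 7) * 7)
    return days, days <= MAX_DAYS


print(plan_test_duration(5272, 2, 1000))
print(plan_test_duration(45499, 2, 1000))
```

For example, if each of two variations needs 5,272 visitors and the page receives 1,000 visitors a day, the sketch suggests 14 days, inside the recommended window; at 45,499 visitors per variation it suggests 91 days, well outside it.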

The Minimum Sample Size Required For Reliable A/B Tests

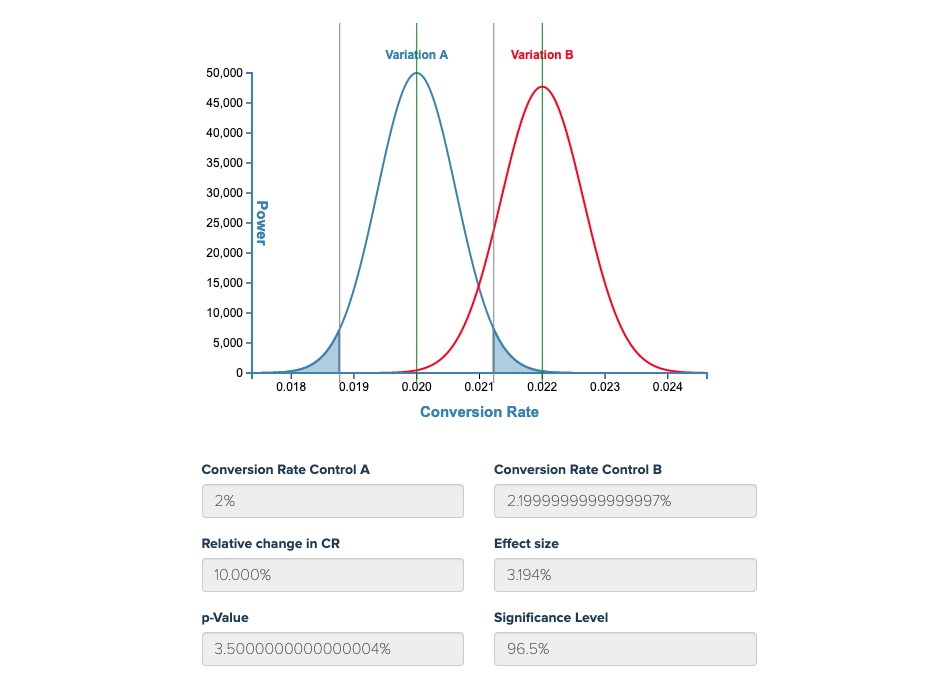

To calculate the A/B testing sample size required for your test, you need to know the CONVERSION RATE for your original page and the EFFECT SIZE of your new scenario. You also need to set a confidence level that will be used to determine if your results are significant.

Your effect size is the magnitude of the difference produced by your new scenario. In website optimization, this is usually your CONVERSION RATE INCREASE or “UPLIFT” (the relative percentage increase in your conversion rate).

It is important to remember that the same 25% uplift produces a much larger absolute increase when your original conversion rate is high (for example, webpage 1 at 16%) than when it is lower (webpage 2 at 4%).

For webpage 1, your new conversion rates would be…

- Version A (the control): 16%

- Version B (the new page, with 25% uplift): 20%

For webpage 2, with a lower original conversion rate, the same uplift is a much smaller absolute increase (1 percentage point rather than 4)…

- Version A (the control): 4%

- Version B (the new page, with 25% uplift): 5%
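The arithmetic behind these two examples is a single multiplication. Here is a minimal sketch (the function name `apply_uplift` is our own) that applies a relative uplift to a baseline conversion rate and reports the absolute change in percentage points:

```python
def apply_uplift(baseline_rate, uplift):
    """Return the new conversion rate after a relative uplift.

    Both arguments are fractions, e.g. 0.16 for 16% and 0.25 for 25%.
    """
    return baseline_rate * (1 + uplift)


for name, baseline in [("webpage 1", 0.16), ("webpage 2", 0.04)]:
    new_rate = apply_uplift(baseline, 0.25)
    gain_points = (new_rate - baseline) * 100  # absolute change
    print(f"{name}: {baseline:.0%} -> {new_rate:.0%} (+{gain_points:.1f} pp)")
```

The relative uplift is identical for both pages, but the absolute gap the test has to detect is four times larger for webpage 1.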

If you know in advance what the effect size of your experiment is likely to be, you can calculate the sample size required to achieve A/B test significance.

Convertize Shows You When Your Tests Are Reliable

In most cases, the effect size that your changes will produce is unknown until partway through the test. That’s why Convertize predicts the number of days your experiment needs to achieve significance, at the same time as calculating your Uplift and the Significance of your test.

These are some A/B testing scenarios, based on common parameters…

- CR 3%, Uplift 15%, Confidence Level 95%: Minimum Sample Size = 19,056

- CR 10%, Uplift 15%, Confidence Level 95%: Minimum Sample Size = 5,272

- CR 10%, Uplift 5%, Confidence Level 95%: Minimum Sample Size = 45,499
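These figures can be approximated with the standard sample-size formula for comparing two conversion rates. The sketch below is an assumption about how such numbers are produced, not Convertize’s documented method: it uses a one-sided test at the chosen confidence level and 80% statistical power (a parameter the scenarios do not state), and it returns visitors per variation. With those settings it lands within a visitor or two of the three scenarios listed. `min_sample_size` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def min_sample_size(baseline_rate, uplift, confidence=0.95, power=0.80):
    """Visitors needed PER VARIATION to detect a relative uplift.

    Assumes a one-sided two-proportion z-test (pooled variance under
    the "no difference" hypothesis) and the given statistical power.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + uplift)
    z_alpha = NormalDist().inv_cdf(confidence)  # one-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


for cr, up in [(0.03, 0.15), (0.10, 0.15), (0.10, 0.05)]:
    print(f"CR {cr:.0%}, uplift {up:.0%}: {min_sample_size(cr, up)} per variation")
```

Cutting the expected uplift from 15% to 5% multiplies the required sample by more than eight, because the sample size grows roughly with the inverse square of the difference you want to detect.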

For a step-by-step guide to running A/B tests, read this Introduction to A/B testing.

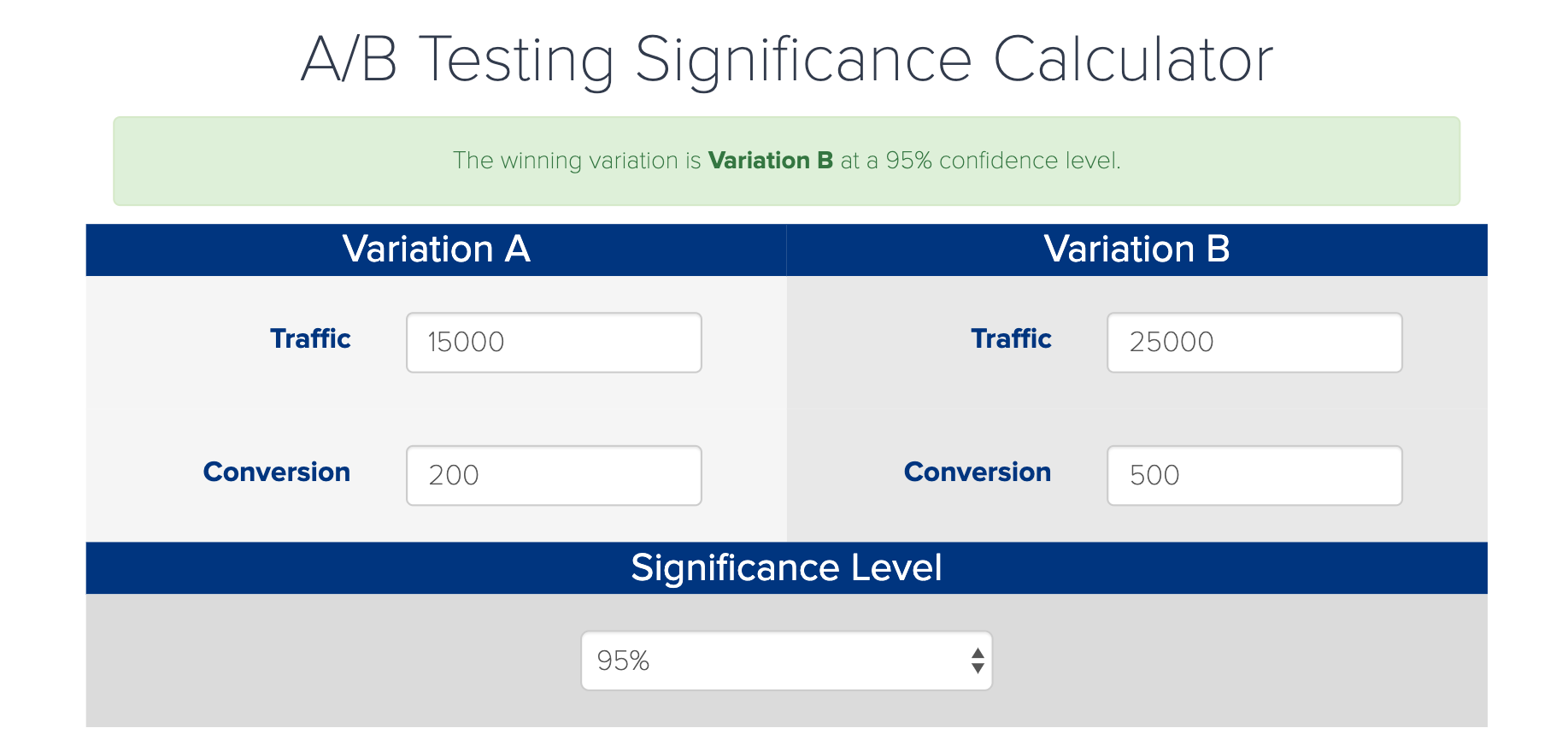

Calculating Your A/B Test’s Significance

If you have performed an A/B test and you want to calculate the significance of the results yourself, you can use this simple A/B testing calculator. All you need to know is the number of visitors your pages have received and the number of conversions they have produced. The calculator assumes a confidence level of 95%, as this is the industry standard.
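If you would rather check the numbers in code, a common way to compute this kind of significance is a two-proportion z-test on visitors and conversions. This is a minimal sketch, assuming a two-sided test at 95% confidence; Convertize’s calculator may use a different method, and `ab_test_significance` is a hypothetical helper, not part of any Convertize API.

```python
import math


def ab_test_significance(visitors_a, conversions_a, visitors_b, conversions_b,
                         confidence=0.95):
    """Two-sided two-proportion z-test on visitor and conversion counts.

    Version A is the control, version B the variation.
    Returns (relative_uplift, p_value, is_significant).
    """
    if conversions_a == 0:
        raise ValueError("control has no conversions, so uplift is undefined")
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    uplift = (rate_b - rate_a) / rate_a
    # Pooled conversion rate under the "no difference" hypothesis.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if se == 0:
        return uplift, 1.0, False
    z = (rate_b - rate_a) / se
    # Two-sided p-value: P(|Z| >= |z|) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return uplift, p_value, p_value < 1 - confidence


uplift, p, significant = ab_test_significance(10_000, 1_000, 10_000, 1_150)
print(f"Uplift {uplift:.1%}, p-value {p:.4f}, significant: {significant}")
```

For example, 1,000 conversions from 10,000 visitors on the control against 1,150 from 10,000 on the variation is a 15% uplift with a p-value well below 0.05, so it is significant at 95% confidence; the same conversion rates measured on only 1,000 visitors per page would not be.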

More Help With A/B Test Significance

One of the benefits of using Convertize is that you can A/B test your webpages safely, regardless of how much traffic you have. The built-in library of A/B testing ideas gives you hundreds of suggestions for different things to test and the Autopilot actively manages your traffic, so you don’t lose conversions whilst you test.