Why do I have to wait for a reliable result?

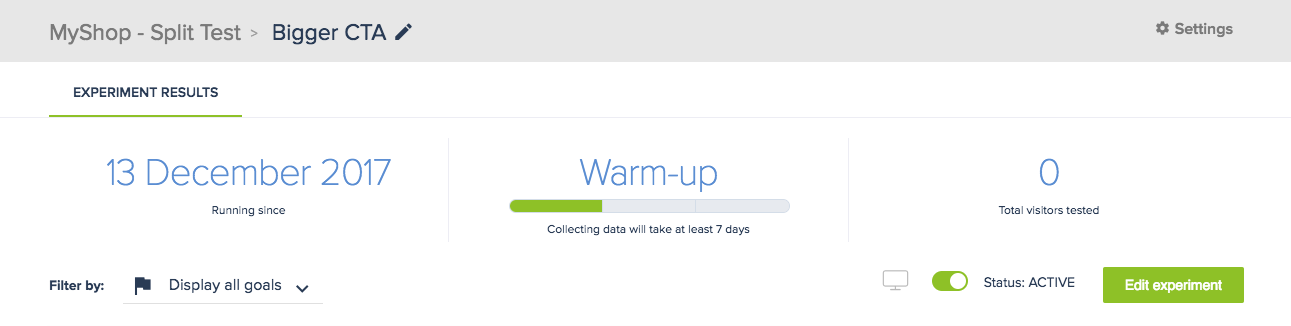

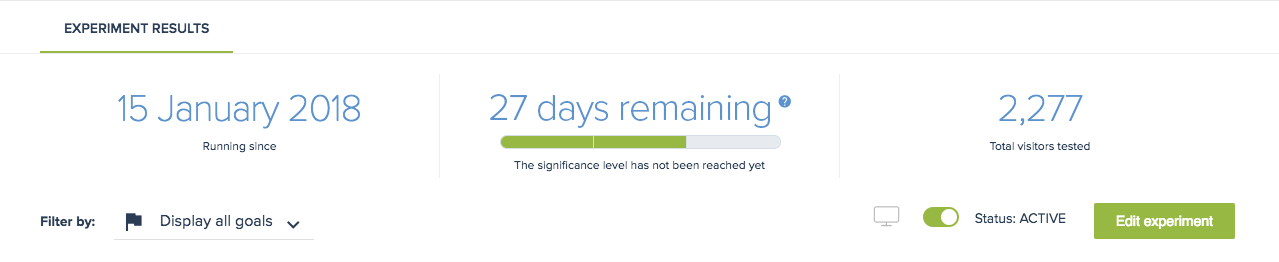

After launching an experiment, the results page (image below) will show you the following information:

- The start date of your experiment

- The phase your experiment is in, or the estimated time remaining before a significant result is reached

- The number of visitors tested

Warm-up phase

Every experiment begins in the “Warm-up” phase, a period of at least 7 days during which data is collected. The minimum is set to 7 days so that data is gathered across every day of the week (for example, Saturdays can produce very different results from Tuesdays). Once the warm-up is over, your experiment may continue collecting data.

Once enough data has been collected, the platform will display an estimate (shown below) of how long it will take to reach a statistically significant result, based on the significance level and the amount of traffic your website receives.
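As a rough illustration of how such an estimate can be produced, the sketch below uses the standard sample-size formula for a two-sided, two-proportion z-test. It is not Convertize's own calculation: the baseline conversion rate, the smallest uplift you want to detect (`relative_uplift`), the 80% statistical power and the function names are all assumptions made for this example. Following this article's usage, `significance` means the confidence level (0.95 for 95%). The 7-day floor mirrors the warm-up phase.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, relative_uplift, significance=0.95, power=0.8):
    """Approximate visitors each variant needs (two-sided two-proportion z-test)."""
    p1 = baseline_rate                          # current conversion rate (assumed known)
    p2 = baseline_rate * (1 + relative_uplift)  # rate we hope the variation reaches
    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_days(daily_visitors, n_variants, baseline_rate, relative_uplift,
                   significance=0.95, power=0.8, warm_up_days=7):
    """Days needed to reach the sample size, never shorter than the warm-up."""
    per_variant = visitors_per_variant(baseline_rate, relative_uplift, significance, power)
    # Traffic is split evenly, so every variant needs its own share of visitors.
    days = math.ceil(per_variant * n_variants / daily_visitors)
    return max(days, warm_up_days)


# Hypothetical site: 1,000 visitors/day, original + 1 variation,
# 5% baseline conversion rate, looking for a 20% relative uplift.
print(estimated_days(1_000, 2, 0.05, 0.20))                     # 17 (95% significance)
print(estimated_days(1_000, 2, 0.05, 0.20, significance=0.85))  # 11 (85% significance)
```

The same inputs show both levers the platform mentions: less traffic means more days, and a higher significance level means more visitors per variant.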

Even if one variation of your experiment has had the highest conversion rate for several consecutive days, you should still wait for the full length of time indicated by your experiment timer.

Convertize calculates the length of time needed for a statistically significant (or reliable) result based on your traffic and the significance level. If you implement the best-performing variation before the result has been confirmed as significant, you could be acting on incorrect information, because an early lead can be produced by random variation alone.
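The risk of acting early can be illustrated with a small simulation. The sketch below runs many hypothetical A/A tests, in which both variants share exactly the same 5% conversion rate so any "winner" is a false positive, and compares checking a standard two-proportion z-test every day against checking it once at the planned end. The traffic figures, the 28-day duration and the choice of z-test are assumptions for illustration, not a description of how Convertize evaluates results.

```python
import math
import random


def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or only conversions) yet: no evidence either way
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(peek_daily, runs=200, days=28, visitors_per_day=100,
                        rate=0.05, alpha=0.05, seed=1):
    """Share of A/A tests wrongly declared significant.

    visitors_per_day is the number of visitors each variant receives per day.
    """
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        conv_a = conv_b = visitors = 0
        for day in range(1, days + 1):
            for _ in range(visitors_per_day):
                conv_a += rng.random() < rate  # both variants convert at the same rate
                conv_b += rng.random() < rate
            visitors += visitors_per_day
            # Either look every day, or only once the planned duration is over.
            if (peek_daily or day == days) and p_value(conv_a, visitors, conv_b, visitors) < alpha:
                false_positives += 1
                break
    return false_positives / runs


print("checked once at the end:", false_positive_rate(peek_daily=False))
print("checked every day:      ", false_positive_rate(peek_daily=True))
```

With settings like these, the single check typically stays close to the 5% error rate the test is designed for, while checking every day and stopping at the first "significant" result declares a winner several times more often, even though no real difference exists.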

What is statistical significance?

To understand this measure, we need to look at how statistics are used to draw conclusions from testing. When you run an A/B test, Convertize receives data from the visitors to your website during the test. We treat those visitors as a sample and use that information to confirm or reject a hypothesis about all future visitors.

Let’s say we run a test with the hypothesis: “Having a bigger ‘Buy Now’ button will increase my conversion rate.” We then use our sample (all visitors for the duration of the test) to work out whether a bigger “Buy Now” button increases conversions.
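To make this concrete, the sketch below checks the “Buy Now” hypothesis with a two-proportion z-test, one common frequentist test for comparing conversion rates. The visitor and conversion counts are invented for illustration, and Convertize's own statistical engine may work differently.

```python
import math


def z_test(conv_control, n_control, conv_variant, n_variant):
    """Return (observed relative uplift, two-sided p-value) for a pooled two-proportion z-test."""
    rate_control = conv_control / n_control
    rate_variant = conv_variant / n_variant
    pooled = (conv_control + conv_variant) / (n_control + n_variant)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_variant))
    z = (rate_variant - rate_control) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided
    return rate_variant / rate_control - 1, p_value


# Hypothetical sample: original button vs bigger "Buy Now" button.
uplift, p = z_test(conv_control=200, n_control=4_000, conv_variant=250, n_variant=4_000)
print(f"observed uplift: {uplift:.1%}, p-value: {p:.3f}")
```

Here the observed uplift is 25% and the p-value comes out at roughly 0.015: if the bigger button truly made no difference, a gap at least this large would appear only about 1.5% of the time, so the result would clear both an 85% and a 95% significance level.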

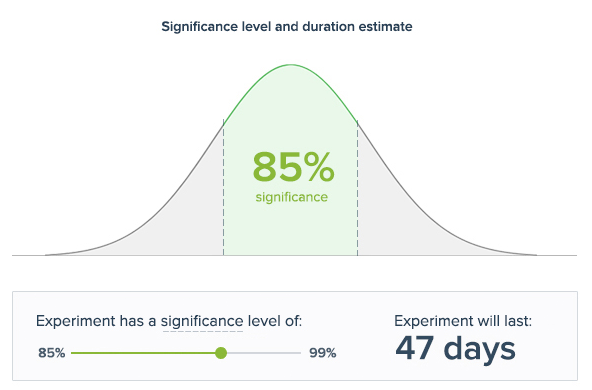

Because a hypothesis is an educated guess, it is susceptible to error. In the graph below, the likelihood of error is represented by the greyed-out sections. If you see an unexpected result, say that a bigger “Buy Now” button leads to a lower conversion rate, it could fall within the 15% margin of error that a significance level of 85% leaves.

If you raise the significance level to 95%, the widely accepted standard for significance, the chance of an incorrect result is reduced, but the experiment needs to run for longer.
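This trade-off can be quantified, assuming a two-sided z-test with 80% statistical power (both assumptions for this sketch, not documented Convertize settings). Under that model the required number of visitors grows with the square of the sum of the critical value and the power term, so at constant traffic the test length grows the same way. The function names below are illustrative.

```python
from statistics import NormalDist


def critical_z(significance):
    """Two-sided critical value: how extreme a result must be to count as significant."""
    return NormalDist().inv_cdf(1 - (1 - significance) / 2)


def relative_duration(significance, baseline=0.85, power=0.8):
    """Test length relative to the baseline level, at constant traffic and effect size."""
    z_beta = NormalDist().inv_cdf(power)
    return ((critical_z(significance) + z_beta) / (critical_z(baseline) + z_beta)) ** 2


for level in (0.85, 0.90, 0.95, 0.99):
    print(f"{level:.0%}: false-positive chance {1 - level:.0%}, "
          f"critical z = {critical_z(level):.2f}, "
          f"duration x{relative_duration(level):.2f}")
```

Under these assumptions, moving from 85% to 95% cuts the false-positive chance from 15% to 5% and makes the experiment roughly 1.5 times as long.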

I’ve received a Smart Recommendation – what should I do?

If Convertize detects little change in the ranking of the best- and second-best-performing variants over a fixed period of time, it is highly likely that the current winner will prove to be statistically significant.

Because of this, the platform will recommend that you implement it rather than wait for the experiment to conclude. This is designed to save you time; however, you can choose to ignore the Smart Recommendation and wait until the platform confirms that a fully statistically significant result has been reached.
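The exact rule behind Smart Recommendations is not described here, so the following is purely a hypothetical sketch of this kind of check: it treats the ranking as stable when the same variant has led for a given number of consecutive days and its lead over the runner-up has moved by less than a tolerance. The function name, the 7-day window, the tolerance and the data layout are all invented for illustration.

```python
def ranking_is_stable(daily_rates, window=7, tolerance=0.002):
    """Hypothetical check: same leader, with a steady lead, for the last `window` days.

    daily_rates: one dict per day mapping variant name -> cumulative conversion rate
    (at least two variants per day).
    """
    if len(daily_rates) < window:
        return False
    leaders, gaps = set(), []
    for rates in daily_rates[-window:]:
        first, second = sorted(rates.items(), key=lambda item: item[1], reverse=True)[:2]
        leaders.add(first[0])
        gaps.append(first[1] - second[1])  # lead of the best variant over the runner-up
    return len(leaders) == 1 and max(gaps) - min(gaps) < tolerance


# Hypothetical history: the bigger button leads every day by a slowly growing margin.
history = [{"Original": 0.050, "Bigger button": 0.056 + day * 0.0001} for day in range(10)]
print(ranking_is_stable(history))
```

A check like this only says the ranking has settled; it is not a significance test, which is why waiting for the platform's full result remains the more cautious option.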